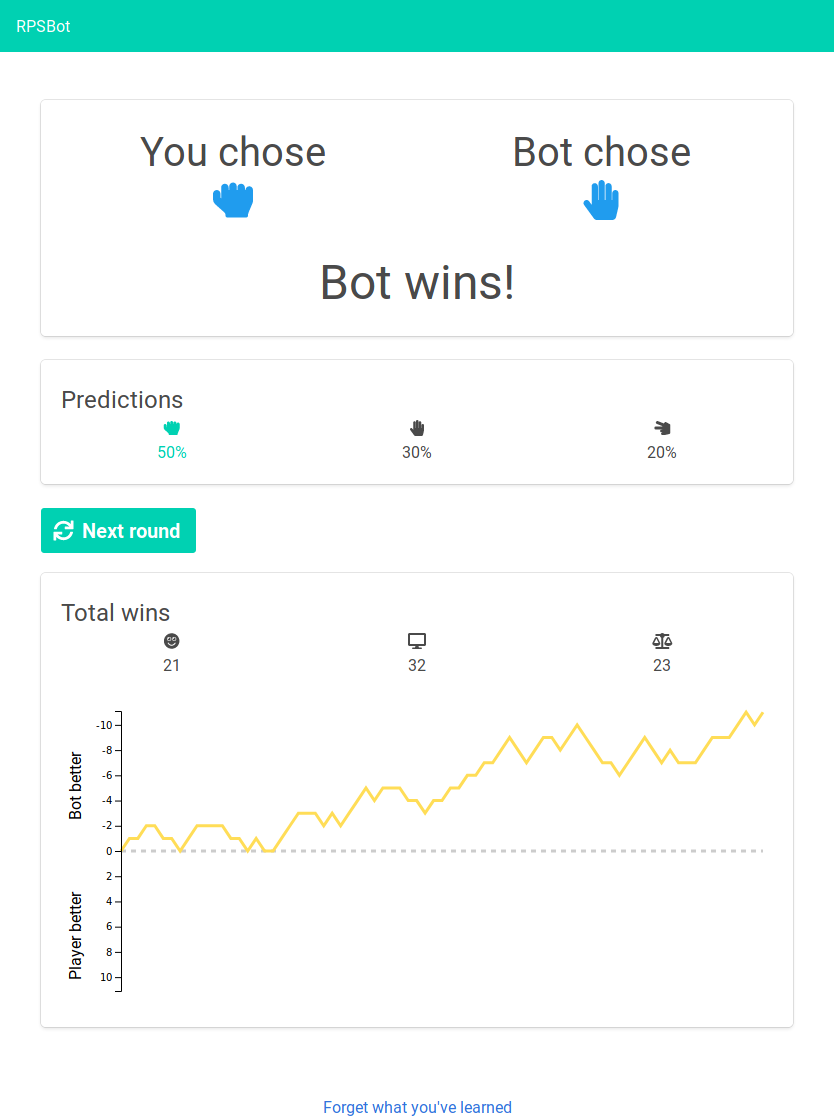

A bot that learns how you play rock-paper-scissors

As a small project, I wrote a bot that learns a player's patterns in rock-paper-scissors using a first-order Markov chain. I am certainly not the first person to have this idea, but I still thought I would give it a go.

My implementation models the combination of the computer's move and the player's move in a round as a single state, which gives 3 × 3 = 9 possible states. After every round, the observed transition from the previous state to the new one is used to update a 9 × 9 matrix of state transitions, so the predictions of the next state keep improving as the game goes on.
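A minimal sketch of that state model in Python with NumPy, in the spirit of the backend but not its actual code. The move encoding (0 = rock, 1 = paper, 2 = scissors), the `state_index` helper, the `TransitionModel` class and the choice to store raw transition counts initialised to 1 (add-one smoothing) are all assumptions made for illustration:

```python
import numpy as np

# Assumed move encoding: 0 = rock, 1 = paper, 2 = scissors.
N_MOVES = 3
N_STATES = N_MOVES * N_MOVES  # 9 joint (computer, player) states


def state_index(computer_move: int, player_move: int) -> int:
    """Encode a (computer, player) move pair as one state in 0..8."""
    return N_MOVES * computer_move + player_move


class TransitionModel:
    """First-order Markov chain over the 9 joint states (hypothetical sketch).

    counts[i, j] is the number of times state j followed state i. Every entry
    starts at 1 (add-one smoothing, an assumption) so each row is a valid
    distribution before any data has been seen.
    """

    def __init__(self) -> None:
        self.counts = np.ones((N_STATES, N_STATES))

    def update(self, prev_state: int, next_state: int) -> None:
        # Record one observed transition after a round has been played.
        self.counts[prev_state, next_state] += 1

    def next_state_probs(self, state: int) -> np.ndarray:
        # Normalise the row for the current state into probabilities.
        row = self.counts[state]
        return row / row.sum()
```

Storing counts rather than probabilities keeps each update a single increment; normalising a row on demand turns it into a distribution over the next state.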

The outcome of a round is defined as +1 if the computer wins, -1 if the player wins and 0 for a draw. The computer then picks the move that maximizes the expected outcome under the current prediction of the next state.
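The move selection can be sketched as follows. Given a predicted distribution over the 9 next states, summing out the computer's component gives the probability P(p) of each player move p, and the expected outcome of computer move m is the sum over p of P(p) · u(m, p), where u is the +1/0/-1 outcome above. The state layout (index 3·c + p), the move encoding (0 = rock, 1 = paper, 2 = scissors) and the `choose_move` helper are hypothetical, not taken from the bot's source:

```python
import numpy as np

# OUTCOME[c, p]: result from the computer's point of view when the computer
# plays c and the player plays p (+1 win, -1 loss, 0 draw).
# Assumed encoding: 0 = rock, 1 = paper, 2 = scissors.
OUTCOME = np.array([
    [0, -1, 1],   # computer rock:     draws rock, loses to paper, beats scissors
    [1, 0, -1],   # computer paper:    beats rock, draws paper, loses to scissors
    [-1, 1, 0],   # computer scissors: loses to rock, beats paper, draws scissors
])


def choose_move(next_state_probs):
    """Return (best computer move, expected outcome of each move).

    next_state_probs is a length-9 distribution over joint states,
    laid out as index 3 * computer_move + player_move (an assumption).
    """
    joint = np.asarray(next_state_probs, dtype=float).reshape(3, 3)
    player_probs = joint.sum(axis=0)      # marginal over the player's next move
    expected = OUTCOME @ player_probs     # expected outcome per computer move
    return int(np.argmax(expected)), expected
```

For example, if the model expects the player to choose rock, paper and scissors with probabilities 0.6, 0.3 and 0.1, the expected outcomes are -0.2 for rock, 0.5 for paper and -0.3 for scissors, so the bot plays paper.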

There are some ideas for making the prediction model fancier, but I am pretty happy with how well it performs after 30 rounds or so. Feel free to give it a try yourself.

The frontend of the game is built with Vue and Axios on top of the Bulma CSS framework, with a bit of D3 sprinkled in for the success plot. The prediction backend uses Python, Flask and NumPy. Icons are from Font Awesome.